Flow Variation A/B Testing

Learn how to perform an A/B test to measure the effectiveness of multiple versions of an experience.


Using Appcues to manage your customer experiences allows you to quickly experiment with changes in Flow content in order to optimize your conversion metrics.

With Flow variation testing, you can perform an A/B test to measure the effectiveness of multiple versions of an experience in parallel. For example, are your users more likely to engage with a slideout containing a GIF or a static photo? Do your users like a tooltip tour, or would they prefer one modal containing all content? A/B testing will help you find the answer.

Setting up a variation test

1. Start by creating two or more Flows.

Before you set up your A/B test, make sure that the target audience for your Flows is large enough to produce statistically significant results. Generally, the larger the group, the better, but best practice is to have at least 500 users in each group. Several online tools can measure your test’s statistical significance.
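How large is "large enough" depends on how big a difference you expect between variations. The Python sketch below uses the standard normal-approximation formula for a two-sided, two-proportion test to estimate users needed per group. It is not an Appcues feature; the function name `users_per_group` and the example conversion rates are hypothetical inputs you would replace with your own.

```python
import math
from statistics import NormalDist


def users_per_group(p_baseline: float, p_target: float,
                    alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH group to detect a change in
    conversion rate from p_baseline to p_target with a two-sided
    two-proportion z-test (normal approximation)."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = z.inv_cdf(power)           # value for the desired statistical power
    p_bar = (p_baseline + p_target) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_baseline * (1 - p_baseline)
                             + p_target * (1 - p_target))
    ) ** 2
    return math.ceil(numerator / (p_baseline - p_target) ** 2)


# Hypothetical example: your Goal converts 10% of users today and you
# hope a variation lifts that to 15%.
print(users_per_group(0.10, 0.15))
```

Smaller expected differences require much larger groups, so treat the 500-user guideline as a minimum rather than a guarantee.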

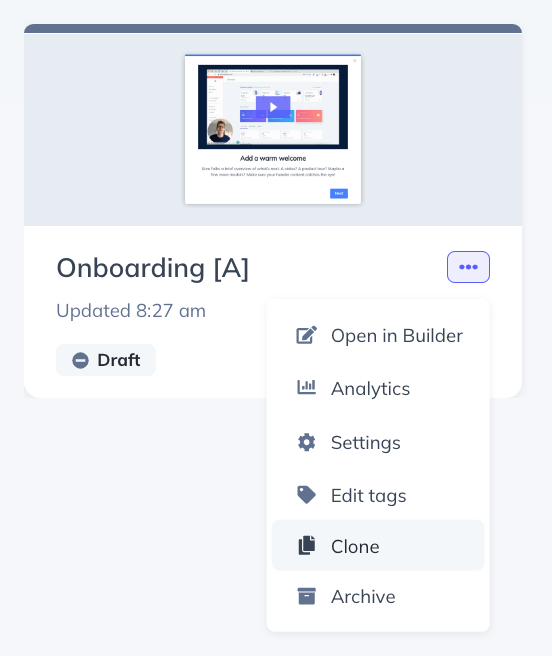

If you’ll be testing slight variations of the same Flow, you can save time by creating the first Flow, cloning it from your Flows list, and making edits to the cloned version in the Appcues Builder.

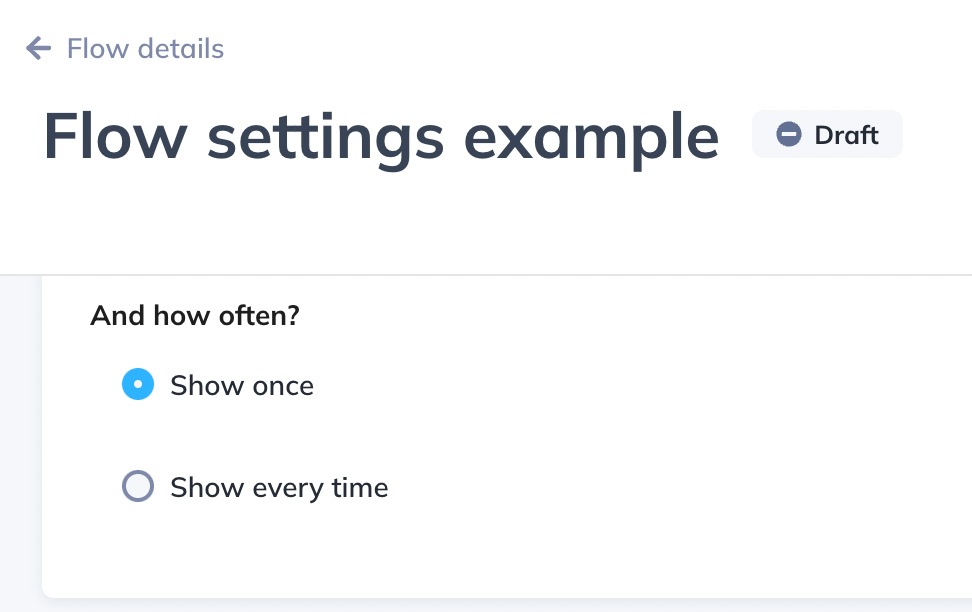

For proper variation testing, set the frequency of each Flow to “Show once”.

2. Create Segments for each Flow variation.

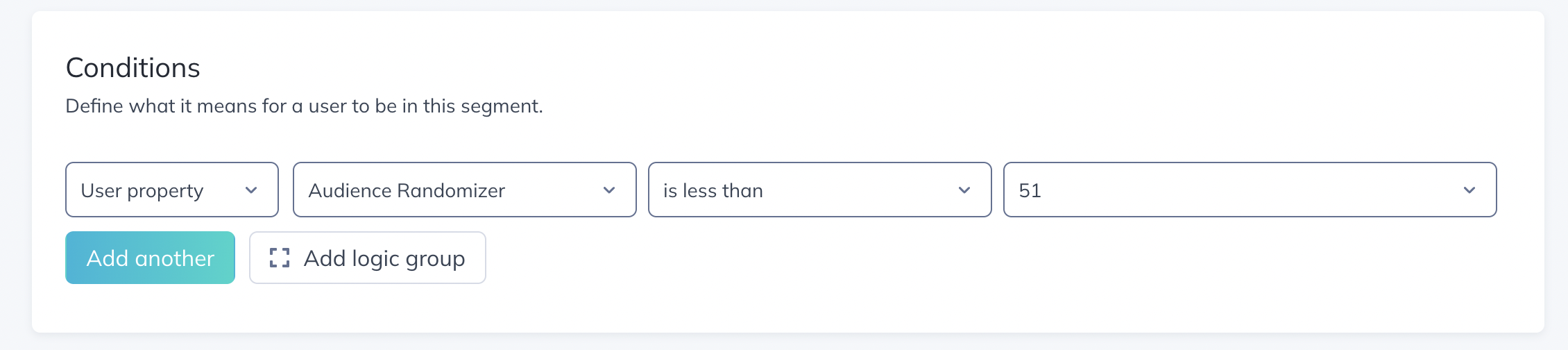

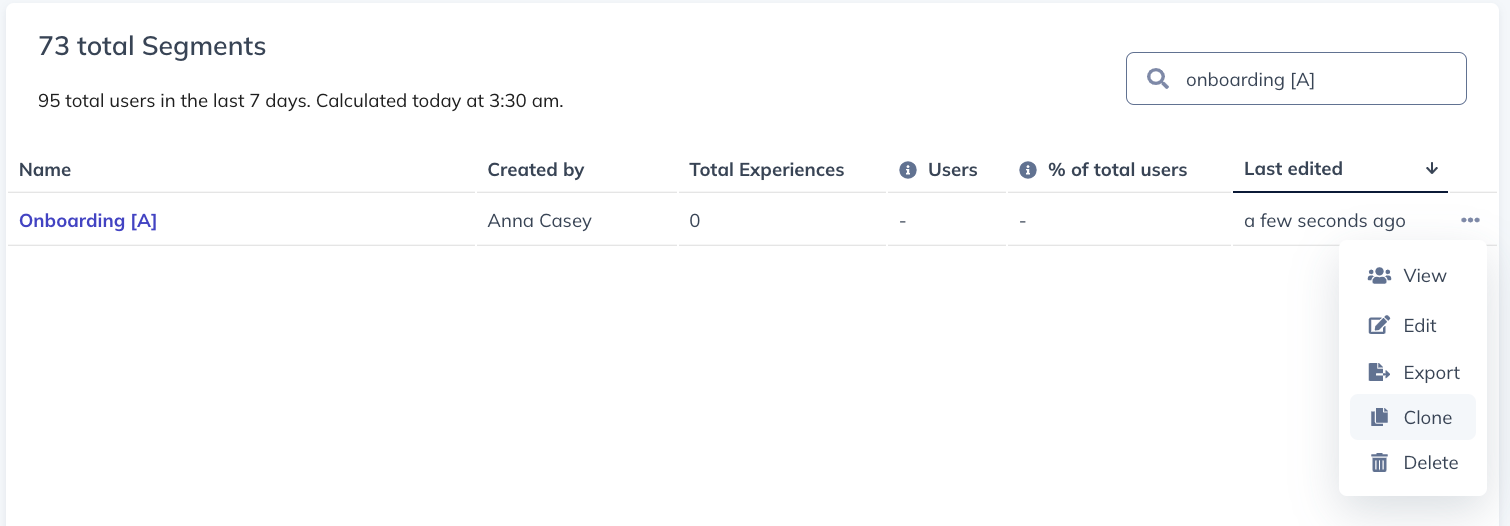

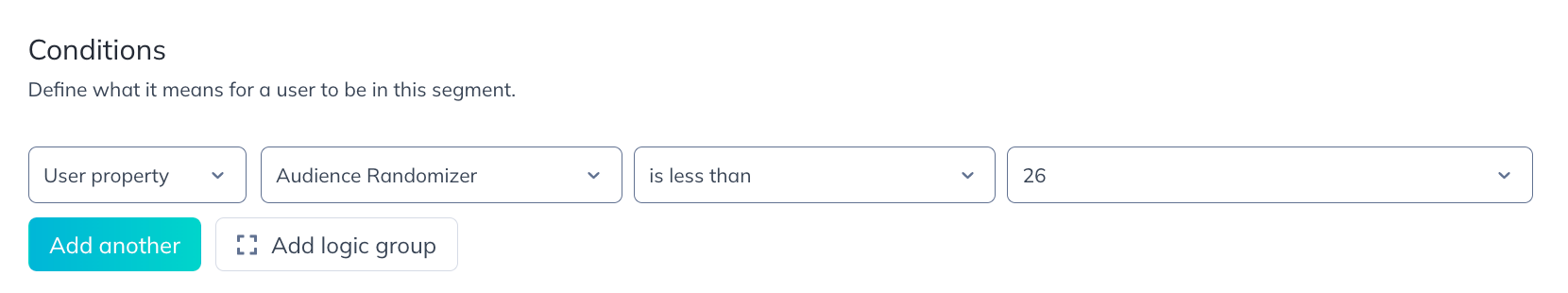

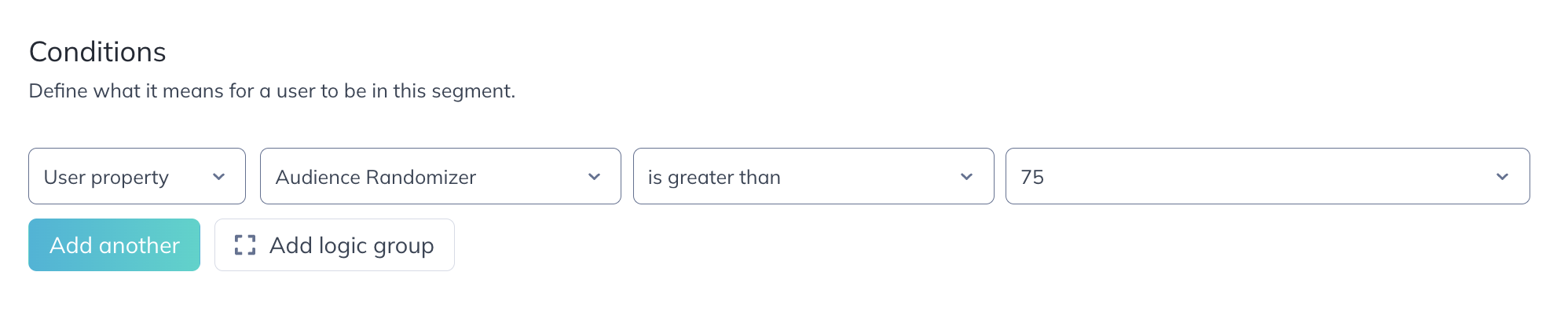

Go to the Segments page, click “Create a Segment”, and use the “Audience Randomizer” auto-property to define the percentage of users to include in the Segment.

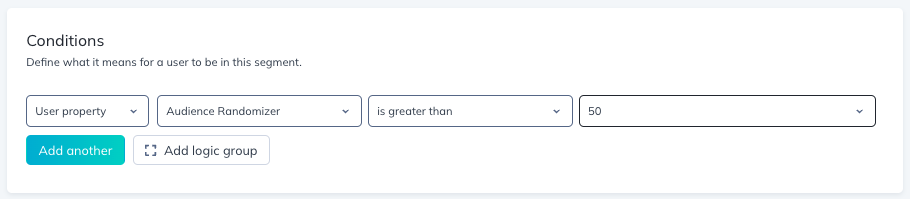

Each user will be randomly assigned a number from 1-100 upon first login, so targeting a Flow to users with an Audience Randomizer number of less than 51 will include all users assigned numbers 1-50. Below we're creating a segment to target Group A.

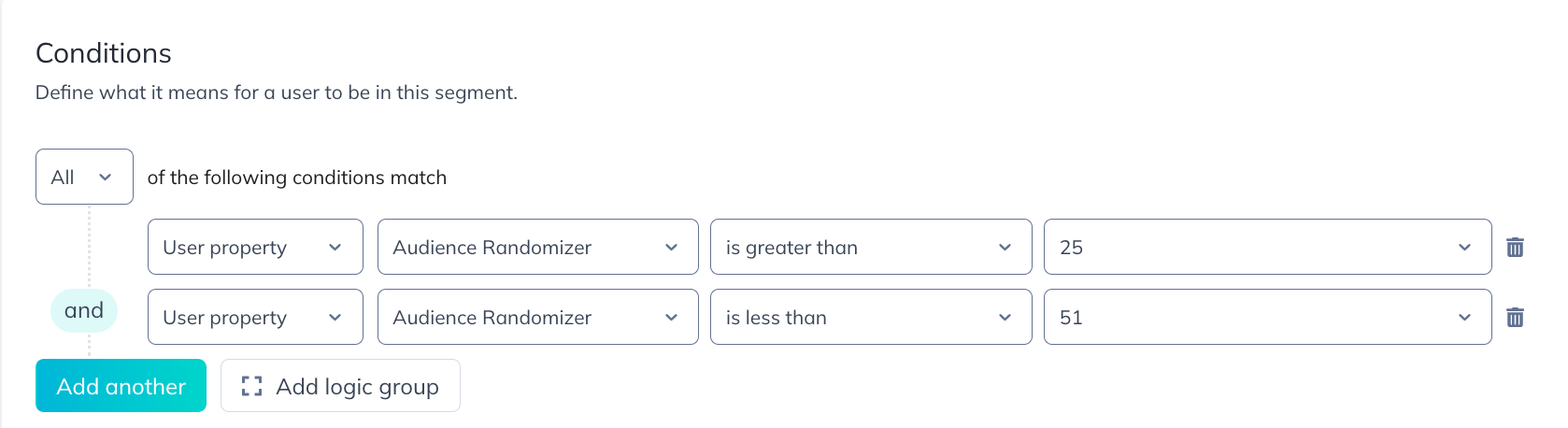

To create your Group B, clone your Segment, and update the Segment name and “Audience Randomizer” attribute in the cloned version. Make sure all other audience segmentation criteria are consistent across your test audiences.

For Group B, set the Audience Randomizer condition to users with a number greater than 50, which includes all users assigned numbers 51-100.
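To sanity-check the boundaries, here is a small Python sketch of how the two Segment conditions ("less than 51" and "greater than 50") partition the 1-100 range. The function `assign_group` is hypothetical and only mirrors the conditions described above; Appcues evaluates the Segment conditions itself.

```python
def assign_group(randomizer: int) -> str:
    """Return the test group whose Segment condition an Audience
    Randomizer value (1-100) satisfies in a two-way split."""
    if not 1 <= randomizer <= 100:
        raise ValueError("Audience Randomizer values range from 1 to 100")
    if randomizer < 51:   # Group A Segment: Audience Randomizer less than 51
        return "A"
    return "B"            # Group B Segment: Audience Randomizer greater than 50


# Every possible value lands in exactly one group, 50 values each.
counts = {"A": 0, "B": 0}
for value in range(1, 101):
    counts[assign_group(value)] += 1
print(counts)  # {'A': 50, 'B': 50}
```

Using "less than 51" and "greater than 50" leaves no gap and no overlap, so no user is eligible for both Flows.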

NOTE: The Audience Randomizer property is a number from 1 to 100 randomly assigned to each user upon first login. Because each assignment is random, more users may end up with an Audience Randomizer of 50 or less than with a number greater than 50 (or vice versa), so you should not expect the two groups to be split exactly evenly. This matters most if your account has relatively few users, because the relative imbalance between groups shrinks as group sizes grow.
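The Python sketch below illustrates why the imbalance matters less as your audience grows. It assumes each user's value is drawn independently and uniformly from 1 to 100, which matches the description above but is not a statement about how Appcues implements the property. Both function names are hypothetical.

```python
import math
import random


def share_std_dev(total_users: int) -> float:
    """Standard deviation of the fraction of users landing in Group A when
    each user independently has a 50% chance (values 1-50 out of 1-100)."""
    return 0.5 / math.sqrt(total_users)


def simulate_split(total_users: int, seed: int = 0) -> tuple[int, int]:
    """Assume a uniform random 1-100 value per simulated user and count how
    many fall into Group A (1-50) and Group B (51-100)."""
    rng = random.Random(seed)
    group_a = sum(1 for _ in range(total_users) if rng.randint(1, 100) <= 50)
    return group_a, total_users - group_a


for n in (100, 1_000, 10_000):
    a, b = simulate_split(n)
    print(f"{n:>6} users: A={a}, B={b}, "
          f"typical deviation of A's share: ±{share_std_dev(n):.1%}")
```

With 100 users, Group A's share typically drifts about 5 percentage points from 50%; with 10,000 users, the drift is about 0.5 points.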

Because this is a static property that does not change once assigned, reusing the same ranges puts the same users in the same groups every time. If you plan to run multiple A/B Flow tests, consider adjusting the exact numbers you target so that different groups of users are eligible for the A or B variation. For example, the second time you create an A/B test, you might target the A version to users with an Audience Randomizer greater than 25 and less than 76 (numbers 26-75), with the B version targeting the remaining users.
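To see how much a shifted range changes the audience, this Python sketch counts the Audience Randomizer values shared between the first test's Group A (1-50) and a second test's Group A. The helper `overlap` is hypothetical; the shifted window of 26-75 is just one possible choice.

```python
def overlap(range_1: range, range_2: range) -> int:
    """Count Audience Randomizer values that fall in both ranges."""
    return len(set(range_1) & set(range_2))


first_test_a = range(1, 51)          # first test, Group A: values 1-50
second_test_same = range(1, 51)      # second test reusing the same split
second_test_shifted = range(26, 76)  # second test, Group A shifted to 26-75

print(overlap(first_test_a, second_test_same))     # 50: identical audience
print(overlap(first_test_a, second_test_shifted))  # 25: only half reused
```

Shifting the window by 25 keeps each group at 50 values while mixing half of each earlier group into the other side of the new test.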

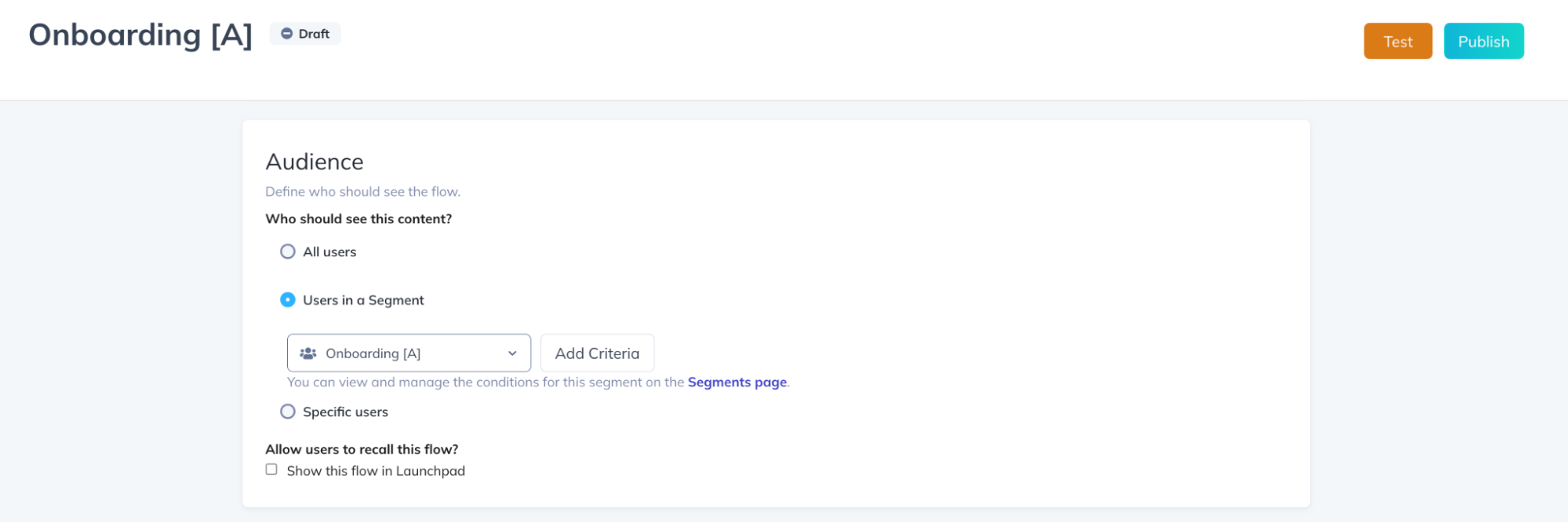

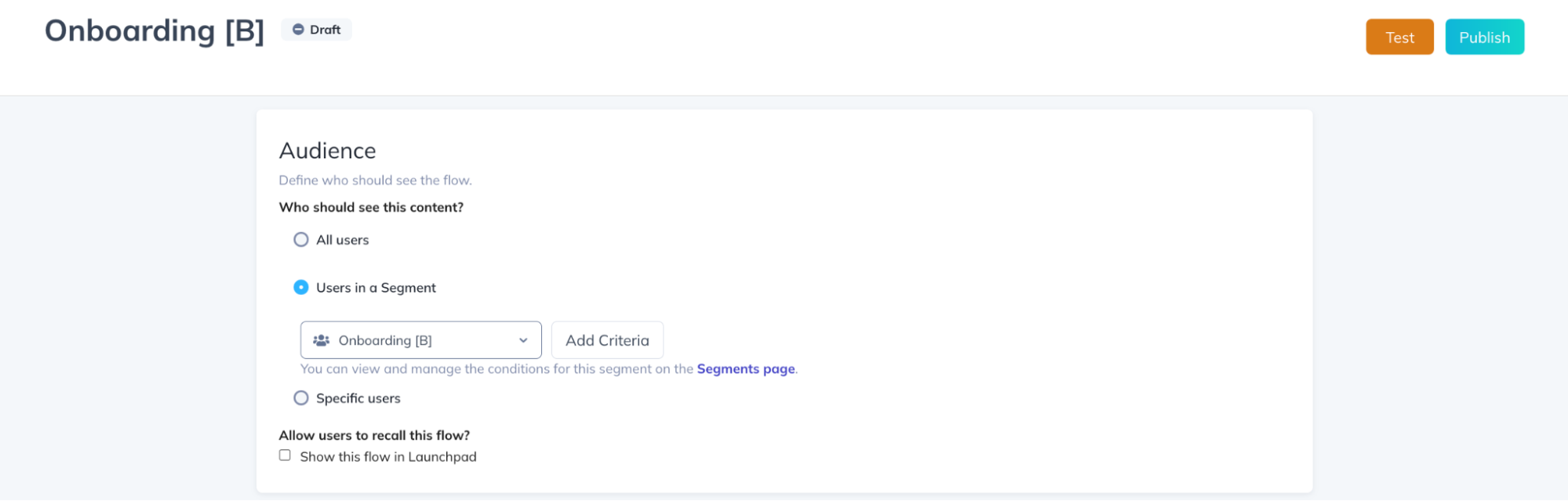

3. Apply your Segments to each Flow variation.

Make sure all other Flow targeting criteria are consistent across each test Flow, for example frequency (“Show once” vs. “Show every time”), page targeting, and Flow priority.

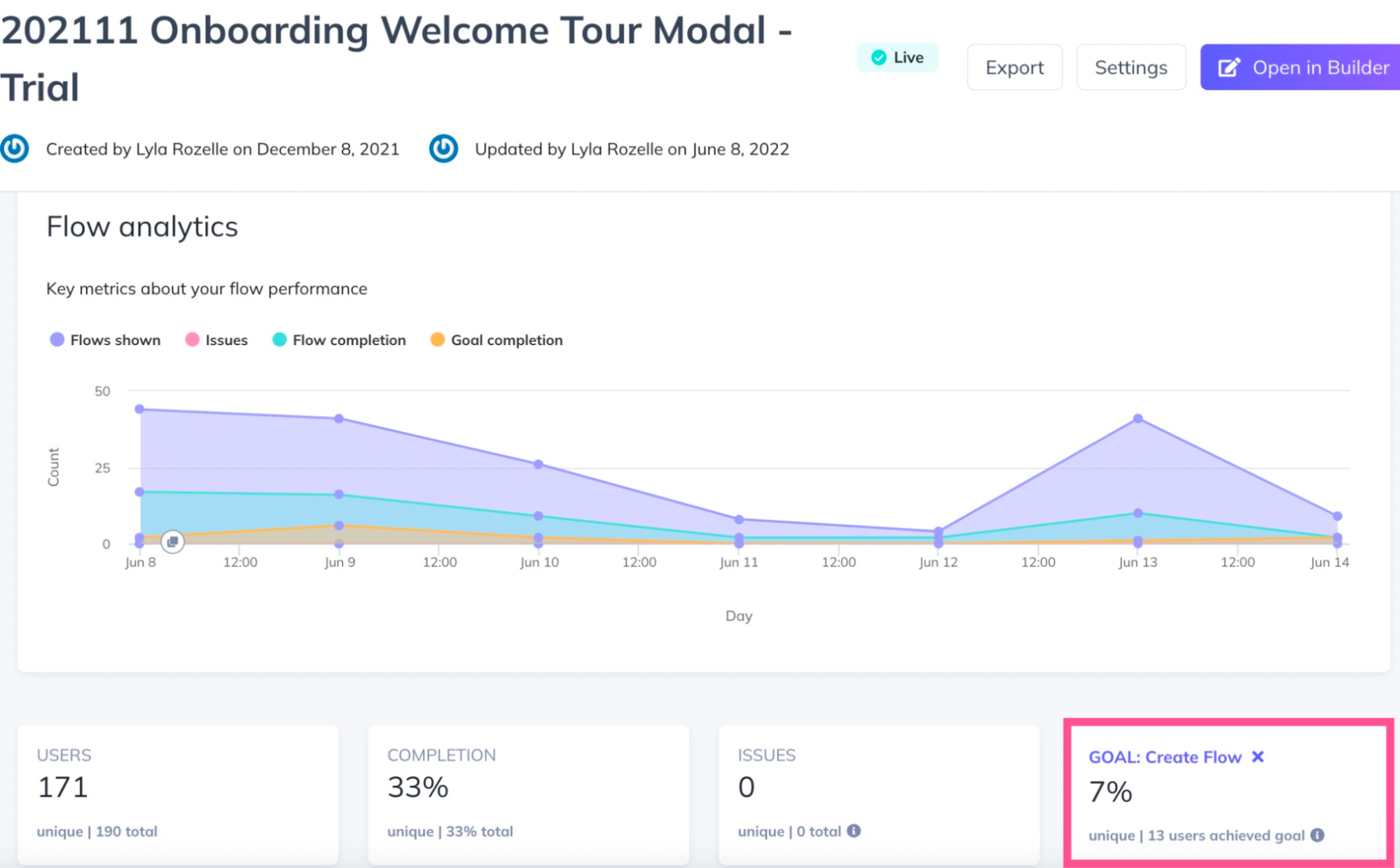

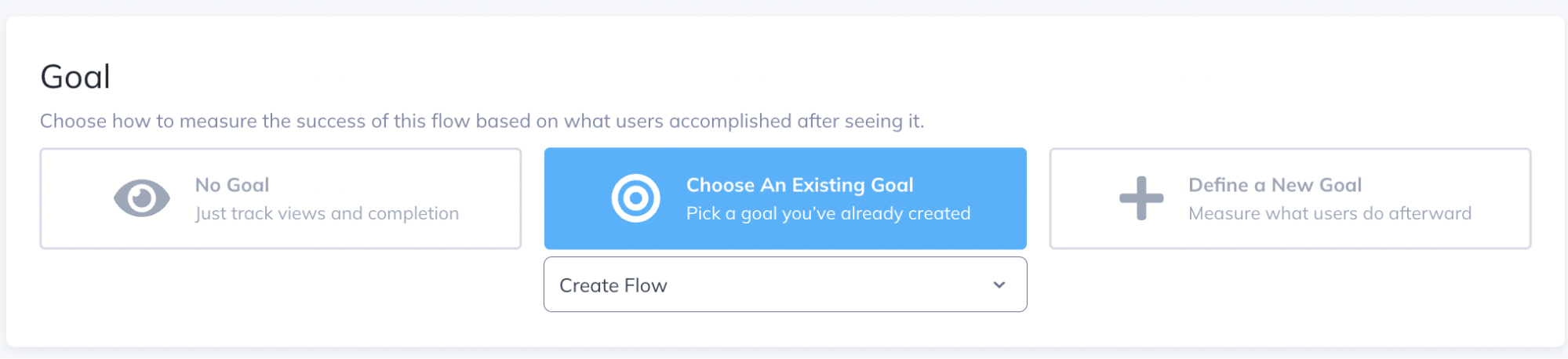

Add the Goal you want to measure for conversion to each Flow. For more information on creating and managing Goals, check out this help doc.

Note: it’s best to measure whether your Flows drive a specific action in your app (for example, completing a trial signup). However, you can take a simpler approach and measure the completion rate of each Flow. In that case, you don’t need to add a Goal to your Flows, since completion rate is always tracked.

Testing more than two variations

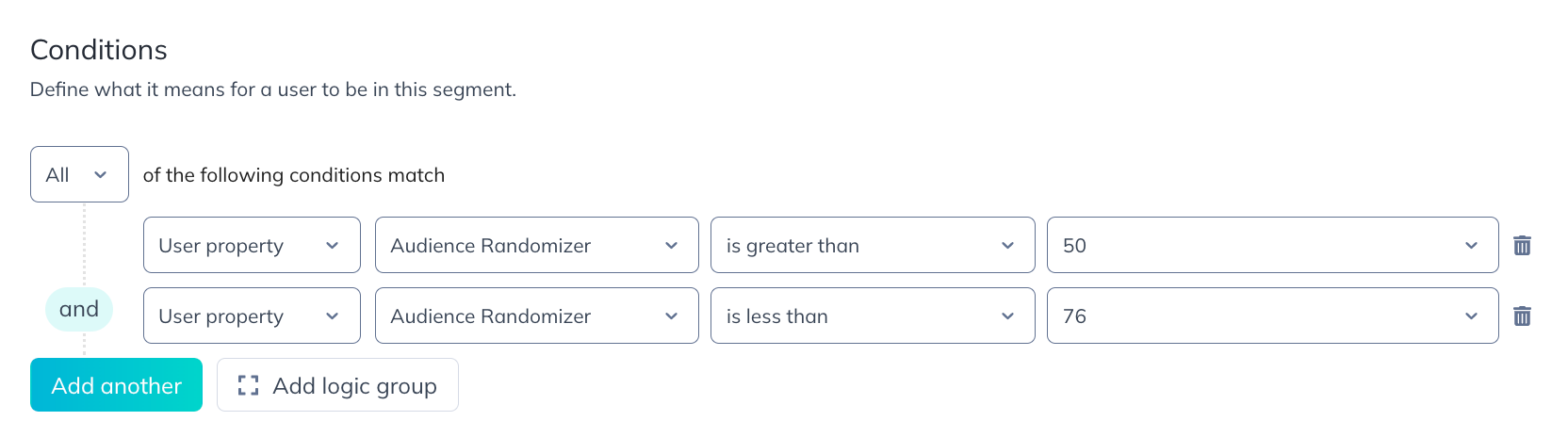

If you’ll be testing more than two variations, create the same number of audience segments as Flows, and split the Audience Randomizer evenly across each.

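For example, if you’re doing an A/B/C/D test, you should have four audience segments that look like this:

- Group A: Audience Randomizer less than 26 (users 1-25)
- Group B: Audience Randomizer greater than 25 and less than 51 (users 26-50)
- Group C: Audience Randomizer greater than 50 and less than 76 (users 51-75)
- Group D: Audience Randomizer greater than 75 (users 76-100)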
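For other numbers of variations, the even split can be computed rather than worked out by hand. This Python sketch divides the 1-100 range into contiguous, nearly equal ranges and prints them as the "greater than" / "less than" conditions used in this article. The helper `split_ranges` is hypothetical; when 100 does not divide evenly, it gives the first ranges one extra value.

```python
def split_ranges(variations: int) -> list[tuple[int, int]]:
    """Divide Audience Randomizer values 1-100 into contiguous, nearly equal
    (low, high) ranges, one per Flow variation."""
    if not 1 <= variations <= 100:
        raise ValueError("variations must be between 1 and 100")
    base, extra = divmod(100, variations)
    ranges, low = [], 1
    for i in range(variations):
        size = base + (1 if i < extra else 0)  # spread any remainder over the first ranges
        ranges.append((low, low + size - 1))
        low += size
    return ranges


for label, (low, high) in zip("ABCD", split_ranges(4)):
    # Express each inclusive range as a Segment-style condition pair.
    print(f"Group {label}: greater than {low - 1} and less than {high + 1}")
```

For Group A the "greater than 0" condition is redundant, and for the last group "less than 101" is, so you can drop them in the Segment builder.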

Measuring results

Once you’ve set a Goal, it is tracked on each Flow’s analytics page. Compare the results of your Flows against each other to determine which Flow performed best.
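Before declaring a winner, it helps to check whether the difference could be due to chance. This Python sketch runs a pooled two-proportion z-test on conversion counts you read off two Flows' analytics pages. It is a generic statistical check, not an Appcues feature, and the function name and example numbers are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, users_a: int,
                           conv_b: int, users_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates,
    using a pooled two-proportion z-test (normal approximation)."""
    rate_a, rate_b = conv_a / users_a, conv_b / users_b
    pooled = (conv_a + conv_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    if std_err == 0:  # nobody (or everybody) converted: no evidence of a difference
        return 1.0
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: Flow A converted 60 of 600 users, Flow B 90 of 600.
p = two_proportion_p_value(conv_a=60, users_a=600, conv_b=90, users_b=600)
print(f"p-value: {p:.4f}")  # below 0.05 is the conventional significance threshold
```

A small p-value suggests the better-performing Flow's advantage is unlikely to be random noise; a large one means you may need more users before deciding.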